Microsoft has finally introduced a new and long-awaited feature for Dynamics 365 for Finance and Operations: Business Events. In a nutshell, it’s a mechanism to create trigger points and send event notifications to external systems. Any business process, from posting an invoice to inserting a record in the database, can be set as an event trigger.

Out of the box it offers many event triggers already built and ready to use, together with several connectors to plug your events into: Service Bus Queues/Topics, Event Grid, Event Hub, Flow or a generic HTTP endpoint, making it compatible with virtually anything. The connection details for the endpoints are securely managed by Azure Key Vault. You can also customize and add your own triggers and connectors.

For more information and technical details, check the official documentation here.

Designing a prototype

Recently I had to prototype an integration solution for a client, based on two principles:

- Minimize the customizations by using standard and out-of-the-box features as much as possible, leveraging existing tools and APIs;

- Decoupled and event-based pattern.

Designing inbound integration interfaces for Dynamics 365 FinOps is pretty straightforward: data is exposed via the OData API for real-time integrations and via the Batch Data API for, as the name says, batch integrations. Alternatively, custom services can be tailored from scratch to expose any sort of data or business logic.

When it comes to outbound integrations, there are many ways to build your event-based solution. Identifying where and when to trigger your events is the key here, keeping in mind that the notification should be lean and simple. When data needs to be exported, the payload shouldn’t contain your full data set unless you’re exporting only a handful of fields.

In our scenario we had to export batch data from multiple entities, each one with different schedules, in a mix of full push and deltas.

One option quickly discarded was to map and insert triggers on each business process. Triggers on data entities or target tables were also not viable due to the volume of customization required.

With that in mind, we decided to leverage features from two internal frameworks, mixing Business Events and the Data Management Framework (DMF).

In DMF we have a fully functional engine that handles large volumes of data, works with individual export projects, applies transformations, gets the deltas, exports in batch, sets recurring jobs, saves the output to storage blobs, and has a not ideal but useful UI to manage and check the history.

By using Business Events with DMF we leverage existing tools to easily build an event-based outbound integration and automate the process of saving/exposing the output data and sending event notifications.

The following diagram shows a simple overview of the solution, for both outbound and inbound interfaces:

For the prototype, Azure Service Bus plays the role of notification/transportation system and Logic Apps is the middleware solution that orchestrates the request/response flow.

Munib Ahmed wrote some very nice blog posts with details about how to build this. You can check out his posts here and here, or just go directly to his GitHub repository here to download the sample code.

A business event customization is composed of three classes:

- Event handler class: defines the trigger and filters to get the event/data.

- Event contract class: defines the content of the notification message payload.

- Event class: the event itself, called by the handler class to initialize/populate the contract and apply any business logic if required.

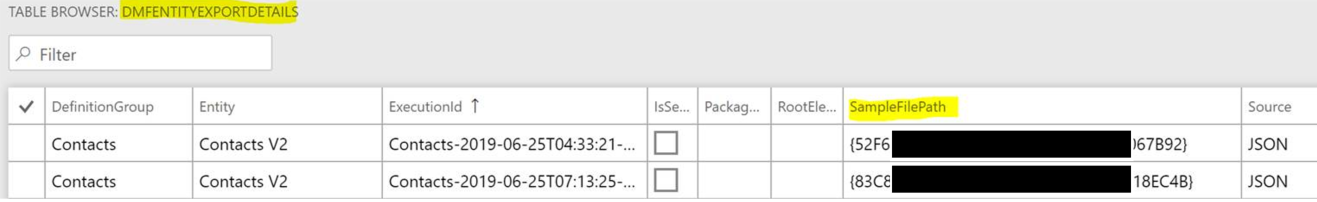

For the prototype, we’ll implement the trigger on the insert action of the DMFEntityExportDetails table. This table is part of the Data Management Framework base engine and it holds all the files generated by the export projects. The key field for us is SampleFilePath, which contains the file’s unique identifier.

A bit of background on that: the files generated by DMF are saved in a temporary Azure Storage Blob by default (managed by Microsoft), and they sit there for seven days (as described in the official documentation here), after which they are automatically deleted.

During these seven days, we can generate a “direct access URL” as many times as we need, because what we are actually generating is the key signature that is attached to the URL to grant read access to the file, and this signature has an expiry time (60 minutes by default). The file location itself doesn’t change. The URL structure looks like this:

https://<account>.blob.core.windows.net/dmf/<filename.ext>?sv=2014-02-14&sr=b&sig=<signature>&st=<utc-start-datetime>&se=<utc-end-datetime>&sp=r

To regenerate the URL with a new key signature valid for another 60 minutes, we just need to call the standard method DMFDataPopulation::getAzureBlobReadUrl() and pass the unique file ID as a parameter.
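On the consumer side, the expiry of a link can be read straight from the `se` query parameter of the URL structure shown above. The snippet below is only a minimal Python sketch of that idea, not part of the prototype: the function names `sas_expiry` and `is_link_valid` and the 60-second safety margin are my own assumptions, and it assumes the timestamp is in the `YYYY-MM-DDTHH:MM:SSZ` form.

```python
from datetime import datetime, timezone
from urllib.parse import parse_qs, urlsplit


def sas_expiry(url: str) -> datetime:
    """Return the expiry time (the `se` parameter) of a SAS URL as a UTC datetime."""
    query = parse_qs(urlsplit(url).query)  # parse_qs also decodes %3A back to ':'
    if "se" not in query:
        raise ValueError("URL has no 'se' (expiry) parameter")
    # Assumption: the timestamp looks like 2019-06-01T11:00:00Z (UTC).
    raw = query["se"][0]
    return datetime.strptime(raw, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def is_link_valid(url: str, now: datetime, margin_seconds: int = 60) -> bool:
    """True if the link still has more than `margin_seconds` left before it expires."""
    return (sas_expiry(url) - now).total_seconds() > margin_seconds
```

A caller could run `is_link_valid(url, datetime.now(timezone.utc))` before downloading, and ask for a regenerated link when the check fails.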

The final prototype outbound design using business events is represented by the diagram below, followed by a step-by-step description.

The Data Management Framework checks for new and updated records (1) from the selected data entities using a recurring data job (minimum recurrence of 1 minute). Every run that finds at least one record generates a file in the pre-defined format and saves it to an internally managed Azure storage (2).

The Business Event engine monitors the DMF outcomes and triggers an event for every new file generated, sending a notification to Azure Service Bus containing the data project details and a direct link (URL) to the file (3). A shared access signature (SAS) token is embedded in the URL providing the necessary access.

The caller receives a notification via Service Bus (4), downloads the file using the URL provided (5), applies data transformation and field mappings (if/when required), and pushes the data to the legacy systems (6).
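Steps 4 to 6 can be sketched in a few lines of Python. In the prototype this job is done by Logic Apps, so treat the code below purely as an illustration. The payload field names `FileUrl` and `DefinitionGroup` are hypothetical (they depend on how you define your event contract), and the download, transform and push steps are passed in as plain functions so the flow stays independent of any particular SDK.

```python
import json
from typing import Callable, Optional


def process_notification(
    body: str,
    download: Callable[[str], bytes],
    push: Callable[[str, bytes], None],
    transform: Optional[Callable[[bytes], bytes]] = None,
) -> str:
    """Handle one notification: read the link, fetch the file, forward the data.

    Returns the name of the data project the file belongs to.
    """
    event = json.loads(body)
    # "FileUrl" and "DefinitionGroup" are hypothetical contract fields.
    file_url = event["FileUrl"]
    project = event.get("DefinitionGroup", "")

    content = download(file_url)      # step 5: download the file via the URL
    if transform is not None:
        content = transform(content)  # optional transformation / field mapping
    push(project, content)            # step 6: hand the data to the legacy system
    return project
```

Because the notification only carries the link and a few project details, the message stays small no matter how large the exported file is.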

The result is simple but effective and works with almost no code customization.

Note

It’s technically possible to change the expiry time by re-implementing the DMFDataPopulation::getAzureBlobReadUrl() method at your own risk (I’ve never tried it, though, and don’t recommend it). The standard code is below.

    /// <summary>
    /// Retrieves a read-only URL for an Azure blob identified by the provided file ID.
    /// </summary>
    /// <param name = "_fileId">Unique identifier of the blob to retrieve a link for.</param>
    /// <returns>A read-only URL for the blob identified by <c>_fileId</c>.</returns>
    public static str getAzureBlobReadUrl(guid _fileId, str category = #DmfExportContainer)
    {
        // If empty category is specified assume
        // temporary storage
        if(!category)
        {
            // temporary-file category
            category = FileUploadTemporaryStorageStrategy::AzureStorageCategory;
        }

        str azureFileId = guid2str(_fileId);

        var blobStorageService = new Microsoft.DynamicsOnline.Infrastructure.Components.SharedServiceUnitStorage.SharedServiceUnitStorage(Microsoft.DynamicsOnline.Infrastructure.Components.SharedServiceUnitStorage.SharedServiceUnitStorage::GetDefaultStorageContext());

        // Fetch a read-only link that is valid for 60 minutes
        var uploadedBlobInfo = blobStorageService.GetData(azureFileId, category, Microsoft.DynamicsOnline.Infrastructure.Components.SharedServiceUnitStorage.BlobUrlPermission::Read, System.TimeSpan::FromMinutes(60));

        return uploadedBlobInfo.BlobLink;
    }

Hi Fabio. Appreciate the post. I’m curious: why do you have the arrow for #4 pointing to Azure Service Bus, rather than pointing towards the pull notification? You describe it as “caller receives notification”. I’d argue that if it’s a received notification, the arrow should point in the other direction. That would be a true push (synchronous) setup. Are there security requirements implying you can only initiate outbound requests and support pull integrations? Or is your arrow pointing in the wrong direction? If this is a true push integration and your arrow is pointing in the wrong direction, what firewall rules did you consider in the design? Thank you! Seth


Hi Seth, good question. While the arrows on the first diagram show only a simple data flow, on the second one I was a bit more specific, as Logic Apps pulls the notifications from Service Bus. This is different from Event Grid or Functions, for example, which receive a push notification and enable true reactive programming. You can build a synchronous or asynchronous pattern regardless of the tool used; this is more related to how you build your code/calls.

Regarding security, that depends on how you’re designing the legacy/on-premises communication. There are many ways, scenarios and constraints to be considered for the design, and that’s out of my scope here. Just as an example, your legacy application can expose a REST/HTTPS service endpoint that won’t require any major firewall changes, or you can even put an SFTP server in between for file-based integration. In both cases it’s a matter of making sure the ports are open and your caller’s IPs are whitelisted.

